Revisiting Engelbart in 2026

A framework to understand the far-reaching effects of AI.

About

Much of the modern experience of using a computer, such as the graphical user interface, pointing at things with a mouse, rich text editing, and even online collaboration, can be traced back to Douglas Engelbart's ideas from the early 1960s. These are great inventions that have withstood the test of time (60+ years!).

Great as these inventions are, they are only secondary derivatives of Engelbart's vision, all of which can be traced back to his 1962 report, Augmenting Human Intellect: A Conceptual Framework.

Part of the report analyzes how humans solve problems and how we can systematically improve human intellect. The other part studies how innovations change not just how we do things, but also how we think and what we can do. In other words, the report is fundamentally about inventions and the positive feedback loop between humans and their inventions.

In this article, I'll try to restate some of the most profound ideas of the report in today's words, and then offer some modern examples, such as how Heptabase and Notion have helped us unlock new ways of thinking. After that, I'll apply the same lens to describe how AI is affecting the software industry and helping humanity unlock new ways of thinking. Finally, I'll discuss the idea of an agent-enhanced intellectual system, H-ALAM/T.

I currently believe Engelbart's framework is the best one for understanding the impact and potential of AI for knowledge workers, and perhaps we can even carry it forward and shift toward an AI-centered intellectual system.

Part of my motivation for writing this article is that I've noticed I'm deeply excited by the expansion of human capability: tools that create a qualitative shift in what a person can think and do, not just a quantitative gain in efficiency. Increasingly, I observe that the leverage comes from creating the right interface between humans, software, and AI. So this study is my attempt to understand myself better, to understand what resonates with me deeply, and, beyond that, to understand what is worth building.

Note: In this article, the terms AI, agents, and LLMs are used interchangeably; the differences between them don't matter in this context.

H-LAM/T systems

As the human population grows, society becomes more complicated and its problems get bigger and harder to solve.

Individual humans can't solve hard problems alone, but a trained (T) group of humans (H) can when they think with the right abstractions (L, language), are armed with special man-made tools (A, artifacts), and use the right methodologies (M, methodology). Engelbart called this composite system an H-LAM/T system. An H-LAM/T system is the basic unit of any intellectual activity that tackles problems.

If we think of intelligence as the ability to solve problems, then a pen is an "intelligence amplifier," because it allows us to solve more problems than we could without it. By the same token, a scheduling tool like a Gantt chart (Artifact) is an intelligence amplifier; so are terms like "user story," "OKR," and "north star metric" (Language), practices like prioritization frameworks and stakeholder reviews (Methodology), and years of talking to customers and making trade-off decisions (Training). The study of augmenting human intellect is the systematic study of improving each of these aspects.

Where does intelligence come from? To answer this question, we can look at how a computer solves a complicated mathematical problem. At the top level, the process looks simple: a user enters a formula and receives an answer. But beneath that lies a hierarchy of sub-processes. First, the problem is solved programmatically by an algorithm that parses, transforms, and evaluates the expression step by step. Beneath that, a compiler translates those high-level instructions into machine code: sequences of primitive operations like comparisons, memory lookups, and arithmetic. And beneath even that, each operation reduces to logic gates flipping bits, governed ultimately by the physics of electrons moving through silicon. Can we say intelligence happens at any one of these layers? We cannot, because each layer is only a mechanical transformation of the one below it, and no layer on its own solves our problem. The problem is solved only when the layers are organized in a useful way, so that the organization produces effects greater than the sum of its parts. In other words, intelligence comes from a process hierarchy that can solve a given problem.
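To make the layering concrete, here is a toy Python sketch of the top layers described above: a tokenizer, a parser, and an evaluator for expressions that use only integers, `+`, and `*`. The function names are invented for this illustration, and real calculators and language runtimes are far more elaborate; the point is only that each stage mechanically transforms the output of the one before it, and none of them answers the question on its own.

```python
import re
from typing import Union

# An expression tree is either an int (a leaf) or a tuple (operator, left, right).
Expr = Union[int, tuple]


def tokenize(text: str) -> list[str]:
    """Layer 1: turn raw characters into tokens such as '2', '*', '3'."""
    return re.findall(r"\d+|[+*]", text)


def parse(tokens: list[str]) -> Expr:
    """Layer 2: turn a flat token list into a tree; '*' binds tighter than '+'."""
    def parse_term(i: int) -> tuple[Expr, int]:
        node: Expr = int(tokens[i])
        i += 1
        while i < len(tokens) and tokens[i] == "*":
            node = ("*", node, int(tokens[i + 1]))
            i += 2
        return node, i

    node, i = parse_term(0)
    while i < len(tokens) and tokens[i] == "+":
        right, i = parse_term(i + 1)
        node = ("+", node, right)
    return node


def evaluate(node: Expr) -> int:
    """Layer 3: walk the tree and apply primitive arithmetic at each node."""
    if isinstance(node, int):
        return node
    op, left, right = node
    if op == "+":
        return evaluate(left) + evaluate(right)
    return evaluate(left) * evaluate(right)


def solve(formula: str) -> int:
    """The top level: only the composition of the layers answers the question."""
    return evaluate(parse(tokenize(formula)))


print(tokenize("2 + 3 * 4"))         # ['2', '+', '3', '*', '4']
print(parse(tokenize("2 + 3 * 4")))  # ('+', 2, ('*', 3, 4))
print(solve("2 + 3 * 4"))            # 14
```

Below `evaluate`, Python itself (and ultimately machine code and logic gates) supplies the `+` and `*`, which is exactly the next layer down in the hierarchy.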

To actually solve a given problem, we need, for each process, a capability that can execute it. For example, to solve 2×2, which is a process, we need more than just "the capability to do arithmetic." We need the capability to recognize the notation, to understand that "×" denotes multiplication. We need the capability to know what multiplication means: that it is repeated addition. We need the capability to perform addition itself, computing 2+2. And we need the capability to hold quantities in memory so that intermediate results aren't lost. Each of these capabilities corresponds to a sub-process in the act of solving 2×2, and none of them alone produces the answer. Since a sophisticated problem can be broken down into a process hierarchy, it can be solved if we can find a matching capability hierarchy to execute each of its processes.
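The same decomposition can be written out as a handful of small functions, one per capability named above: recognizing notation, knowing that multiplication is repeated addition, performing addition, and holding an intermediate result. This is a hypothetical Python illustration with invented function names; the point is that `multiply` only works because the capabilities beneath it exist.

```python
# Capability: recognize the notation, i.e. know which operation a symbol denotes.
NOTATION = {"×": "multiply", "+": "add"}


def recognize(symbol: str) -> str:
    return NOTATION[symbol]


# Capability: perform addition itself (the primitive this sketch builds on).
def add(a: int, b: int) -> int:
    return a + b


# Capability: know what multiplication means (repeated addition), while
# holding a running total in memory so intermediate results aren't lost.
def multiply(a: int, b: int) -> int:
    total = 0            # the "memory" for intermediate results
    for _ in range(b):   # add `a` to the total `b` times (b assumed non-negative)
        total = add(total, a)
    return total


def compute(left: int, symbol: str, right: int) -> int:
    """Compose the capabilities; no single one of them produces the answer."""
    operation = recognize(symbol)
    if operation == "multiply":
        return multiply(left, right)
    return add(left, right)


print(compute(2, "×", 2))  # 4
```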

Capability innovation

Now, what happens when a particular capability is improved? Engelbart argues that the innovation will "have a far-reaching effect throughout the rest of one’s capability hierarchy," because a change can propagate up to the higher-level capabilities that use it, and the resulting changes in those higher-level capabilities can then propagate down to the capabilities beneath them, enabling latent capabilities. A change in capability can also restructure the entire process tree.
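One way to picture upward propagation is as a dependency graph: each capability lists the lower-level capabilities it uses, and when one of them changes, every capability that depends on it, directly or transitively, is potentially affected. The sketch below is a toy Python model with a made-up hierarchy borrowed from the typo example that follows; it captures only upward propagation, not the subtler downward unlocking of latent capabilities.

```python
from collections import deque

# Hypothetical capability hierarchy: each capability maps to the
# lower-level capabilities it relies on.
USES = {
    "publish an essay": ["write an essay"],
    "write an essay": ["draft an outline", "revise a draft"],
    "revise a draft": ["correct a typo", "restructure paragraphs"],
    "restructure paragraphs": ["correct a typo"],
    "draft an outline": [],
    "correct a typo": [],
}


def affected_by(changed: str, uses: dict[str, list[str]]) -> list[str]:
    """Return every capability that transitively uses `changed`, breadth-first."""
    # Invert the graph: for each capability, which higher-level ones use it?
    used_by: dict[str, list[str]] = {name: [] for name in uses}
    for higher, lowers in uses.items():
        for lower in lowers:
            used_by[lower].append(higher)

    seen: list[str] = []
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for higher in used_by[current]:
            if higher not in seen:
                seen.append(higher)
                queue.append(higher)
    return seen


print(affected_by("correct a typo", USES))
# ['revise a draft', 'restructure paragraphs', 'write an essay', 'publish an essay']
```

A breadth-first walk lists the nearest dependents first, which loosely mirrors how a change is felt first by the capabilities closest to it.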

A simple example would be the "capability to correct a typo" while writing. Let's discuss how this capability propagated up to restructure the process of "writing an essay" and how it propagated down to enable the latent capability of "developing multiple ideas simultaneously."

With a traditional typewriter, correcting a typo goes something like this: you paint a thin layer of correction fluid over the mistake, wait a few minutes for it to dry, type over the dried fluid, and then (maybe) retype the entire thing. With the capability to correct what you wrote easily, the process becomes: delete the typo, type again. This capability propagates up to change your process of writing essays, because writing an essay is a higher-level process that uses "correcting a typo." Before this capability, the process hierarchy of writing an essay looked like this: draft an outline on paper with a pencil, type the essay, fix mistakes, retype the entire thing, publish. Now, with this new capability, your writing process might look like this: type as you think, change the structure as you have new ideas, develop multiple ideas at the same time, pick a branch, simplify, fix errors, publish. The structure of the process hierarchy has changed.
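The restructuring can be made explicit by writing both essay-writing processes down as ordered lists of steps and comparing them. This small Python illustration paraphrases the steps from the paragraph above (treating "fix mistakes" and "fix errors" as the same step); it simply shows which steps disappeared and which emerged.

```python
# The two "write an essay" processes from the paragraph above, as ordered steps
# (lightly paraphrased; "fix errors" is treated as the same step in both).
typewriter_process = [
    "draft an outline on paper",
    "type the essay",
    "fix errors",
    "retype the entire thing",
    "publish",
]

editor_process = [
    "type as you think",
    "change the structure as new ideas arrive",
    "develop multiple ideas at the same time",
    "pick a branch",
    "simplify",
    "fix errors",
    "publish",
]


def compare(old: list[str], new: list[str]) -> tuple[list[str], list[str]]:
    """Return (steps that disappeared, steps that emerged), keeping their order."""
    disappeared = [step for step in old if step not in new]
    emerged = [step for step in new if step not in old]
    return disappeared, emerged


gone, added = compare(typewriter_process, editor_process)
print("Disappeared:", gone)
print("Emerged:", added)
```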

Unlocking new ideas

Changes in our tools don't only change how we do things; they also change how we think and what we can think about.

Note how the capabilities of "developing multiple ideas at the same time" and "simplifying after idea development" weren't really usable before, because changes were too expensive. They are latent capabilities, enabled indirectly by the capability of "correcting a typo." Upward and downward propagation from low-level capabilities opens up many possibilities.

modern text editor vs. typewriter


The Neo-Whorfian hypothesis and co-evolving with tools

A hard problem is often cross-disciplinary, which means solving it often requires synthesizing information and collaborating at scale. A solution to a hard problem is the natural product of a well-organized concept (mental) structure, represented in symbol structures (words, diagrams).

Yet, the intellectual activity of any group of people is limited by their ability to manipulate symbols. For example, if writing were physically harder, then our civilization would have produced fewer physical records, and we would have developed different concepts, different notations, different languages, and different social structures.

If the ideas we can have depend on our ability to manipulate concepts and symbols, our ability to manipulate symbols depends on our methods and tools, and our ability to create tools depends on our ideas, then: if we invent better tools, we can manipulate symbols better; if we can manipulate symbols better, we can have new ideas; and if we can have new ideas, we can invent better tools. It's an exciting self-reinforcing loop! Engelbart believed there are powerful ideas that we can discover only once we've built more powerful languages, artifacts, and methodologies.

Computers are symbol-manipulating machines; they can manipulate symbol structures efficiently and at scale. So Engelbart argued that we should invest in developing computer-aided tools and methods and familiarize knowledge workers with these systems. He further argued that we should start by testing whether this self-reinforcing loop is real. If it is, then we should (1) invent better tools to help us get better at getting better (that is, at inventing tools), and (2) apply these methods of self-improvement to help other problem solvers unlock new ways of thinking and new ideas.

An analysis of modern productivity tools

Philosophically, Heptabase and Notion are explicit heirs of Engelbart. They took the idea of systematically improving H-LAM/T systems to heart, giving their users capabilities that were previously unavailable while unlocking new ways of thinking.

I'll briefly introduce the artifacts they offer, then give a taste of the capabilities they provide, how those capabilities propagate up to change your higher-level processes, what latent capabilities they unlock, and finally how they change the way we think and what new ideas they might unlock.

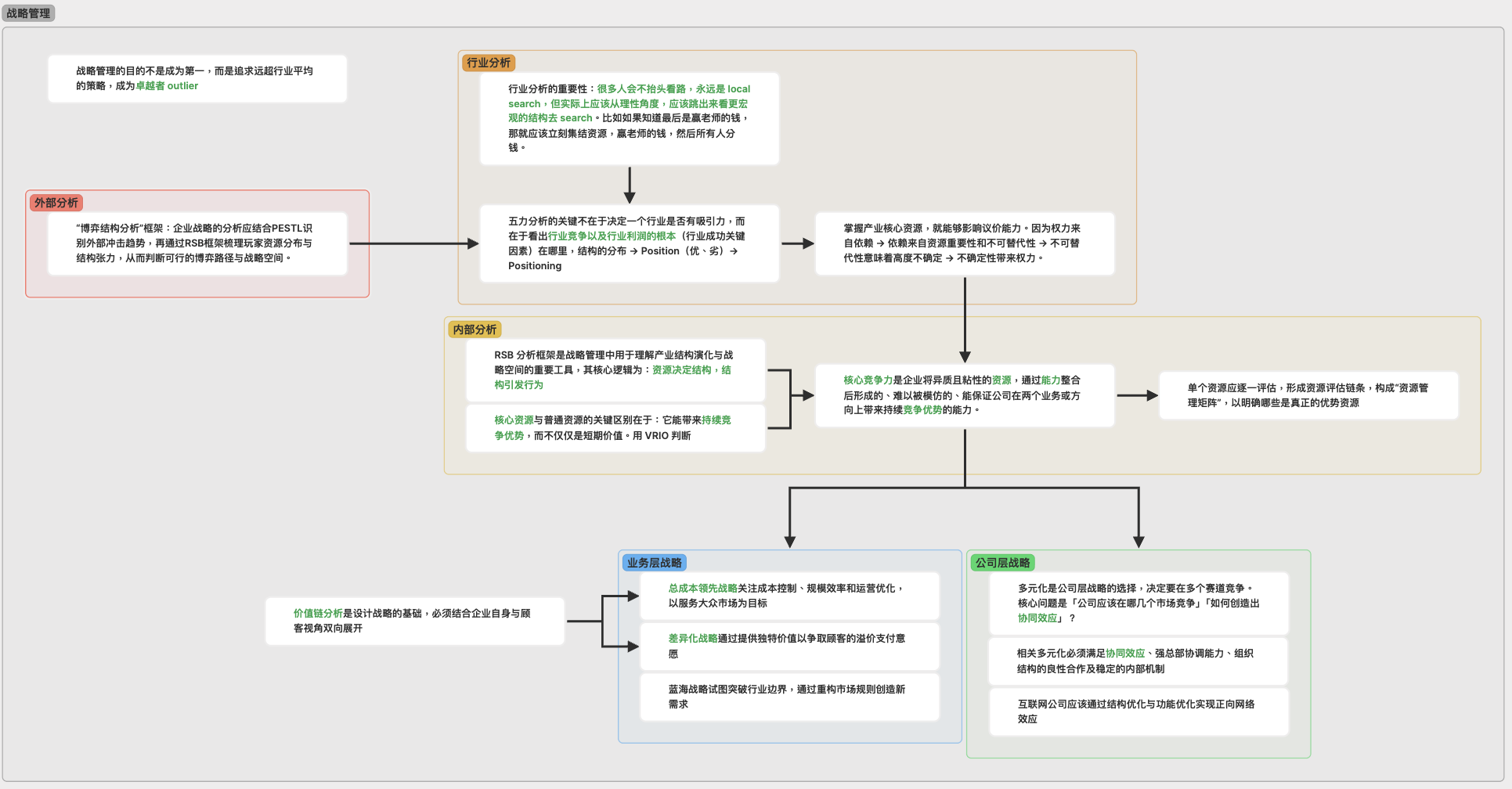

Let's start with Heptabase. On the most superficial level, Heptabase offers whiteboards on which you can manipulate cards. A card can be a document, a note, a video, a math formula, an image, a web page, a chat dialogue, and so on. It offers low-level capabilities like reusing cards across whiteboards, rearranging cards on the canvas, embedding one card in another, connecting cards with arrows on a whiteboard, and a journal page for capturing fleeting thoughts.

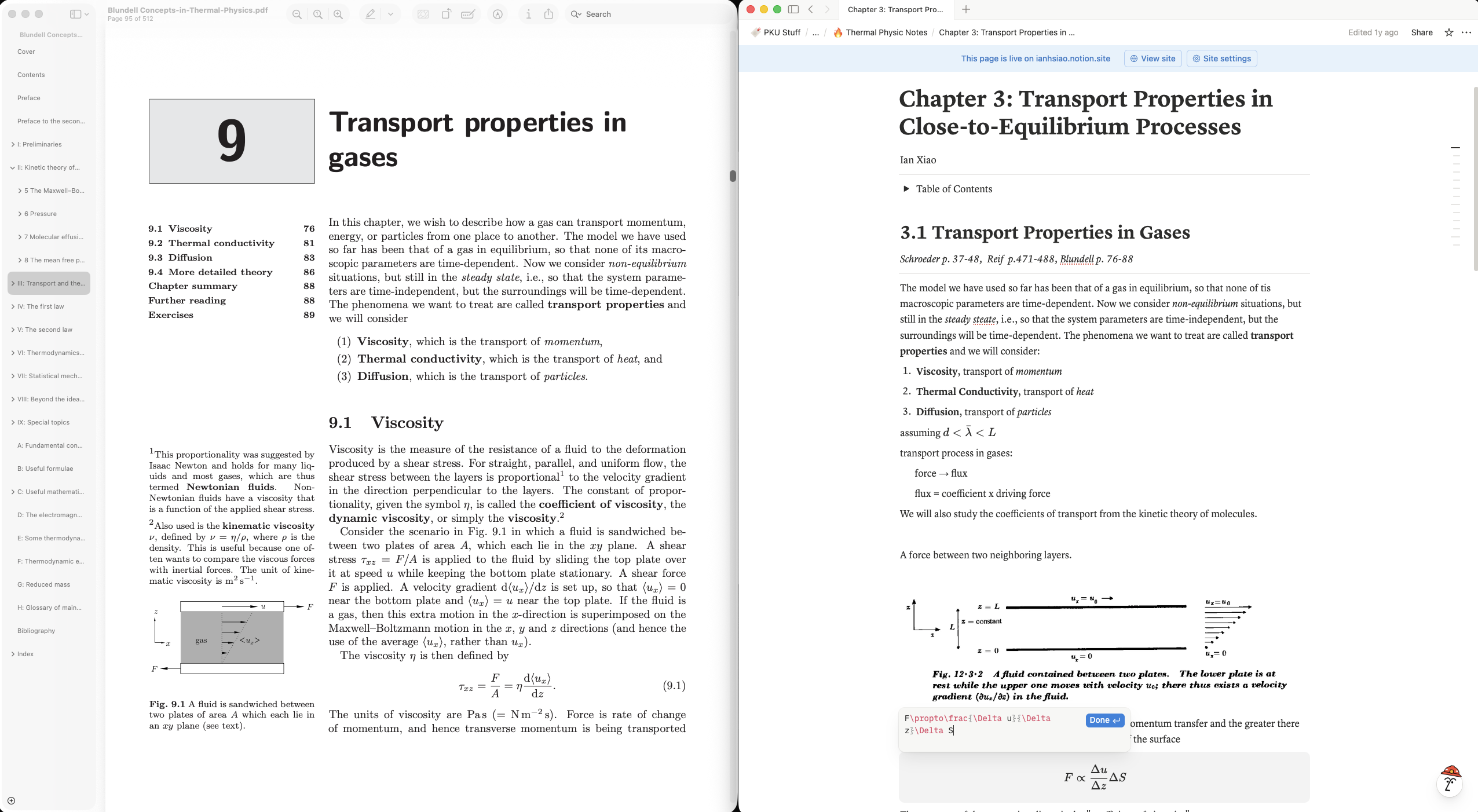

The higher-level process Heptabase aims to improve is understanding complex subjects. So before we inspect how the low-level capabilities propagate up and change how you learn, we should first look at the typical process of learning a complex subject today with a computer or a tablet: you'd open the materials on one side and a notebook in another tab, then read the material, add some notes, repeat, and stop.

taking digital notes the old-fashioned way


After getting familiar with Heptabase, you'd use the aforementioned capabilities to learn through the following sub-processes: open the materials on one side and a card creator in another tab; create a new note, summarize the content in one sentence, and repeat; then put all the cards on a whiteboard, move them around, connect them with arrows, rephrase the summaries, and repeat. In other words, the low-level capabilities propagated up and changed your learning process.

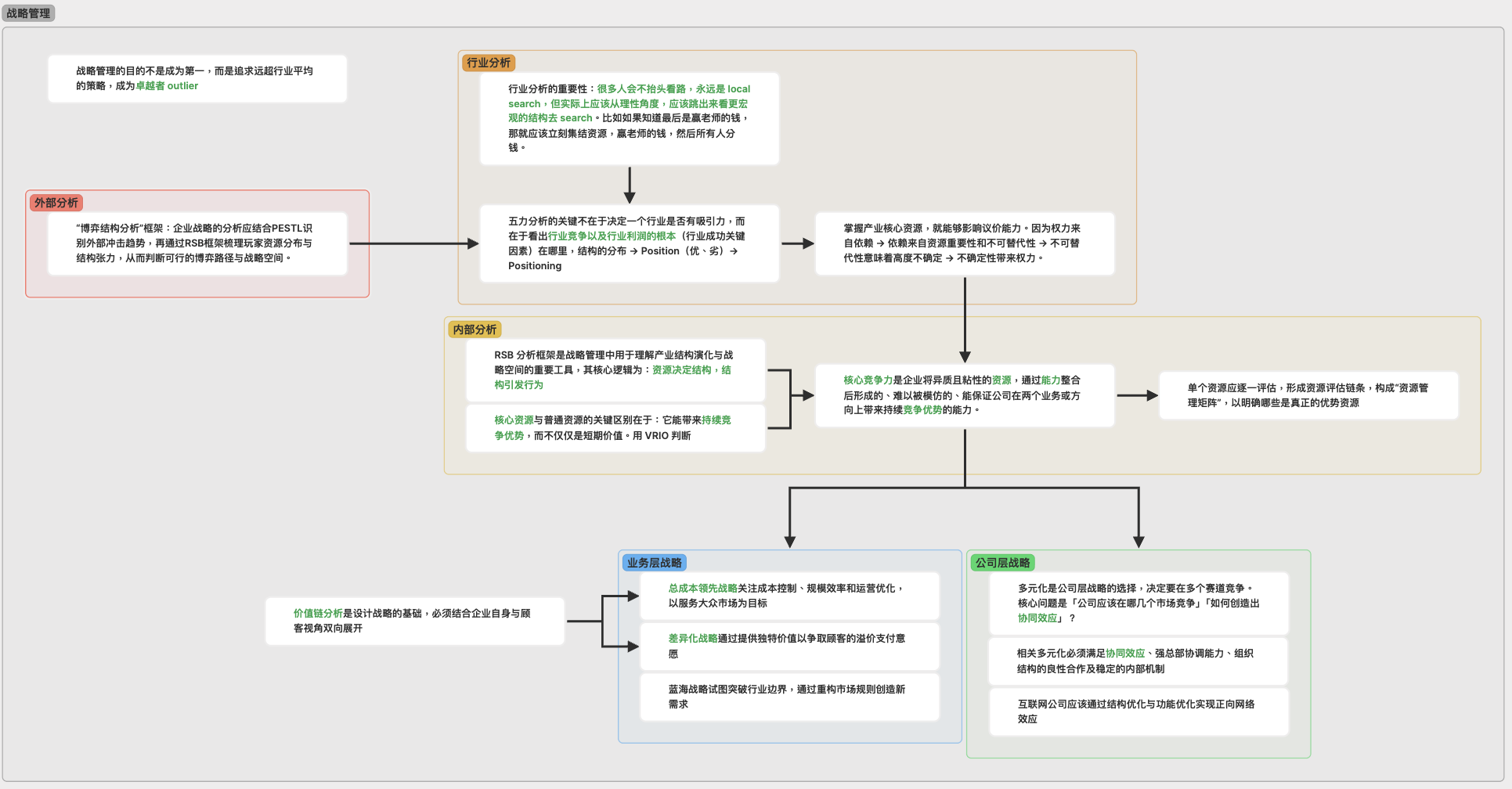

reorganizing core ideas from a 12-lecture series


Notice that even though, with typical note-taking, you could have (1) reorganized your notes to better reflect the nature of the knowledge and (2) summarized your understanding of what you just consumed, you almost never did, because (1) it's hard to reflect the structure of knowledge in linear form, and (2) it's not intuitive to separate summaries from content. In other words, the low-level capability to manipulate cards on a whiteboard unlocked the latent capabilities to organize the structure of knowledge and to synthesize it in a previously unavailable way.

Furthermore, because cards can be reused, comparing ideas from different sources also becomes easy, so you can synthesize ideas across sources with little effort.

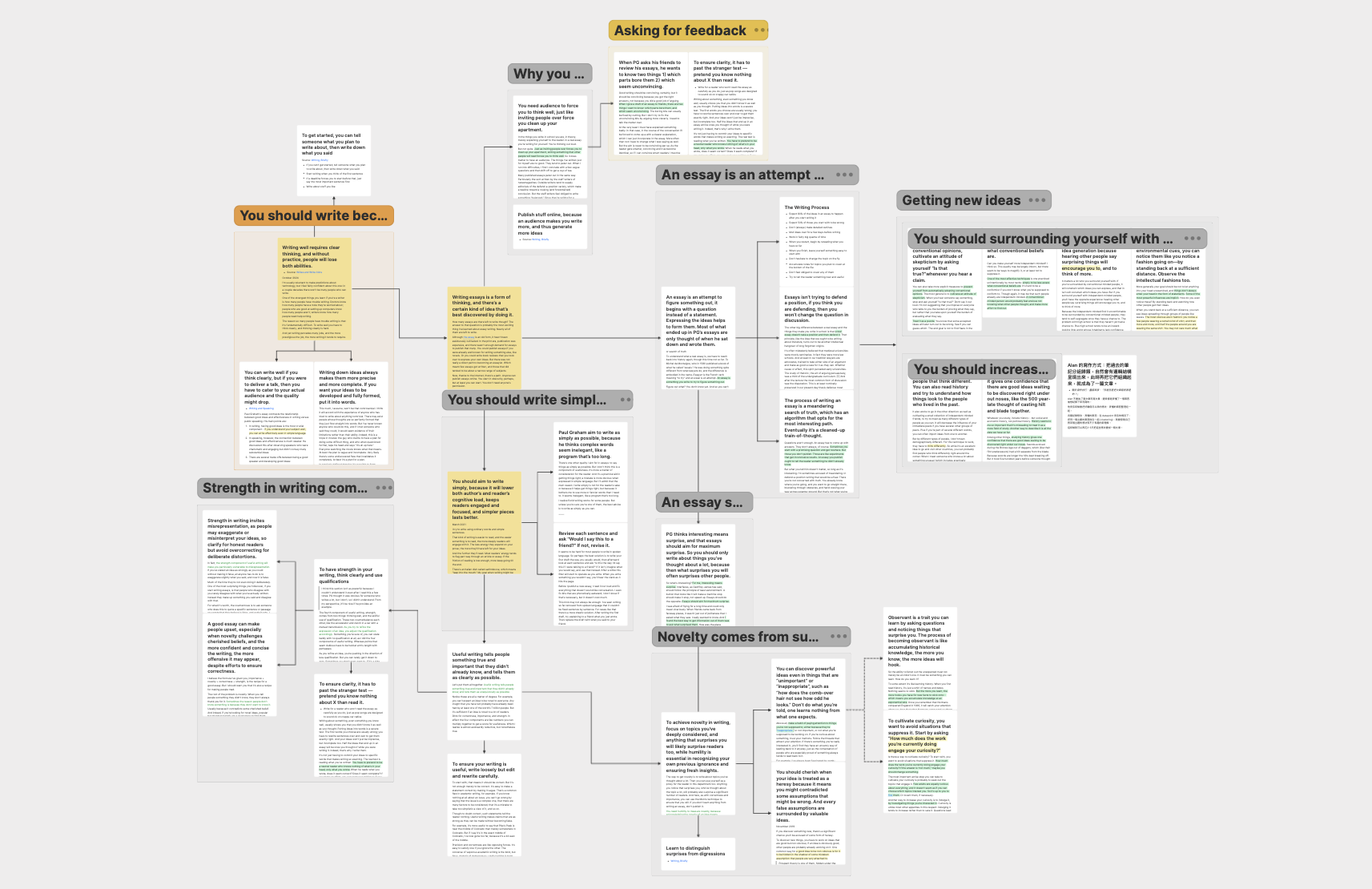

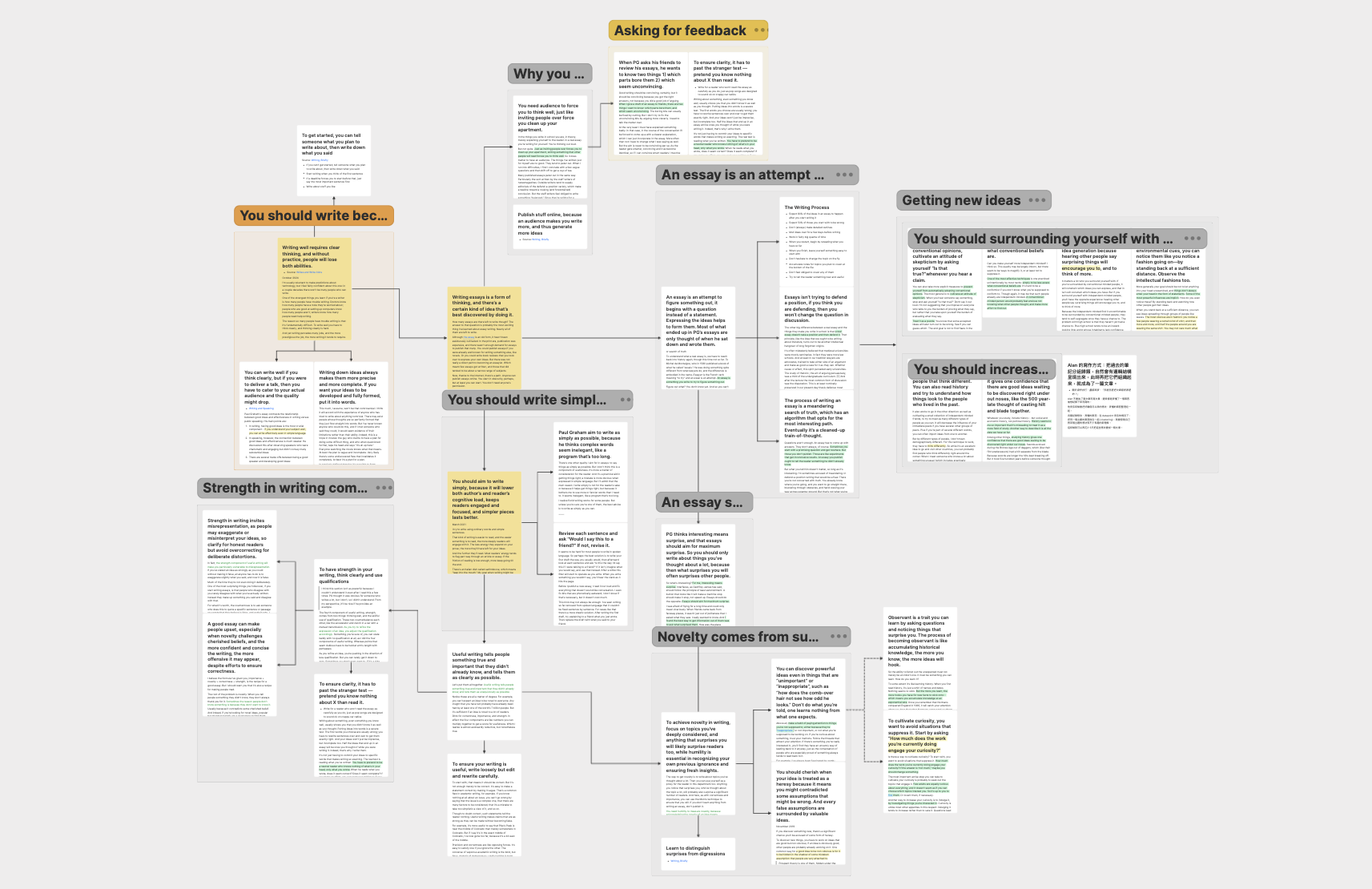

Combining ideas from 11 Paul Graham essays about "how to write"

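Card reuse makes cross-source comparison cheap because a reused card is a reference, not a copy: the same card can sit on several whiteboards, and editing it once updates it everywhere. The Python sketch below illustrates that idea with hypothetical `Card` and `Whiteboard` classes and invented card contents; it is not Heptabase's actual data model, which this article does not describe.

```python
from dataclasses import dataclass, field


@dataclass
class Card:
    title: str
    summary: str


@dataclass
class Whiteboard:
    name: str
    # A board holds references to shared Card objects, not copies of them.
    cards: list[Card] = field(default_factory=list)
    # Arrows between cards, stored as (from_title, to_title) pairs.
    arrows: list[tuple[str, str]] = field(default_factory=list)

    def connect(self, a: Card, b: Card) -> None:
        self.arrows.append((a.title, b.title))


# Hypothetical cards summarizing ideas from two different sources.
simplicity = Card("Simplicity", "Prefer plain words.")
revision = Card("Revision", "Good writing comes from rewriting.")

essays_board = Whiteboard("Essays on writing", [simplicity, revision])
lectures_board = Whiteboard("Lecture notes", [simplicity])
essays_board.connect(simplicity, revision)

# Rephrasing a summary once updates it on every board that reuses the card.
simplicity.summary = "Use the simplest words that say what you mean."
print(lectures_board.cards[0].summary)
```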

You started to think differently. After getting used to the concepts of synthesizing, organizing, and reusing knowledge, you start to ask different questions when you approach new ideas and knowledge domains. Instead of asking shallow questions like "What are the takeaways?" and "What are the interesting concepts?", you begin asking more contextualized questions. For a new topic, you'd start to ask "What are the threads of ideas that are important in this topic?"; for an individual new piece of knowledge, you'd ask "How does this new piece of knowledge fit into my existing knowledge threads?", "What can I learn from comparing these old and new concepts together?"

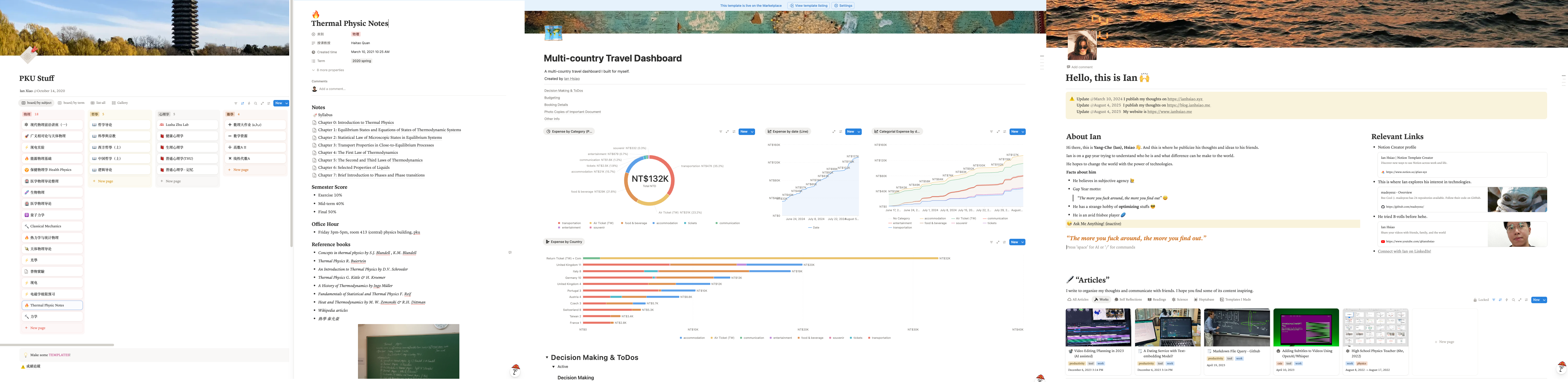

Something similar happens once you become familiar with Notion. The fundamental capability Notion offers is the ability to stack blocks together like Lego. A block can be text, an image, a file, a web page, a formula, a database, a chart, a set of numbers, and so on.

For project management, the typical process before Notion was to build yourself a complex Excel sheet or to purchase an enterprise software license and stick with it regardless of how painful it was to use. With the capabilities Notion offers, the process becomes building your ideal project management software out of blocks. Furthermore, it changes the way you think: when a specific need comes up in your project, you now ask "What can I build with the blocks Notion offers?" instead of "Whom should I ask to purchase new software?" As a result, you unlock the latent capability of building your own software.

the Notion tools I built for course management, travel budget monitoring, and my personal website

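The "blocks like Lego" idea amounts to composition over a small set of primitive types. The Python sketch below is a hypothetical model, not Notion's API or internal data model: a page is a list of blocks, and a database block holds rows that a formula-like function can summarize, which is roughly how a travel-budget tracker can be assembled from generic parts. The sample rows are invented.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Text:
    content: str


@dataclass
class Database:
    # Each row is a dict of column name -> value.
    rows: list[dict]

    def total(self, column: str) -> float:
        """A formula-like rollup: sum one numeric column across all rows."""
        return sum(row[column] for row in self.rows)


Block = Union[Text, Database]


def render(page: list[Block]) -> str:
    """Stack the blocks top to bottom into a plain-text page."""
    lines = []
    for block in page:
        if isinstance(block, Text):
            lines.append(block.content)
        else:
            # This sketch assumes every database has an "amount" column.
            lines.append(f"{len(block.rows)} rows, total = {block.total('amount')}")
    return "\n".join(lines)


expenses = Database(rows=[
    {"item": "train", "amount": 120.0},  # hypothetical sample rows
    {"item": "hotel", "amount": 300.0},
])
budget_page: list[Block] = [Text("Trip budget"), expenses]
print(render(budget_page))
```

The design point is that neither `Text` nor `Database` knows anything about budgets; the "budget tracker" exists only in how the page combines them.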

An analysis of coding agents

AI offers a distinct category of capabilities. In Engelbart's framework, explicit-human processes and explicit-artifact processes are activities performed by humans and by man-made objects, respectively. In Engelbart's terms, then, AI is driving radical change precisely because it lets us replace cognitive processes, which have historically been explicit-human processes, with explicit-artifact processes.

Having made over 3,000 commits across 30+ projects with AI since mid-2024, I'll restrict my discussion to the domain I know best: product building and software engineering. The capabilities coding agents offer, composing code and comprehending code, sit relatively low in the process hierarchy of building, so changes to them propagate up through everything built on top of them; that is why their impact on the software industry is enormous. This is well known; I'll attempt to explain how and why it happens.

Capability of “writing code” and its upward propagation

Upward propagation is all about “how you’d do the original task differently”. I am simply unable to exhaust the useful possibilities here, so I’ll give a few examples.

To discuss upward propagation, we need a high-level process in mind. Building products is one such process that uses writing code, and it roughly decomposes into: find a problem, talk to users, decide what to build, design the product, identify the tasks, prioritize the tasks, figure out what to code, write the code, iterate with a debugger until it works, repeat.

Writing code used to be expensive because it took years of training to master the craft, and hours, if not days, to write useful or performant code. Because the cost was high, you picked familiar frameworks, avoided ambitious refactors, kept scope small, and thought very carefully before writing about which features to add and how they would affect the system architecture. As a result, iteration was slow at best, and technical debt piled up.

Now, with coding agents, the process hierarchy of building products becomes: find a problem, talk to users, decide what to build, have agents build and test, steer the agents, repeat. You might still need to look at the code to sanity-check it, but you certainly don't need to type any syntax if you don't want to.

Writing code isn't just a subprocess of product development; it is also a subprocess of feature implementation, DevOps, documentation, data processing, debugging, frontend engineering, automation, research and experimentation, and so on.

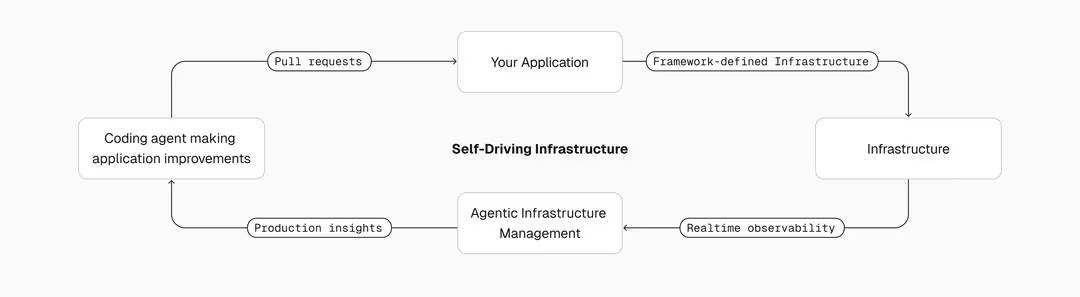

To give a few more examples of capabilities propagating upward: we can wire up self-improving software that automatically triages and fixes user-reported bugs (debugging), and cron jobs that periodically update documentation from code changes (documentation). As the reliability of coding agents increases, we start managing fleets of agents instead of babysitting individual ones (feature implementation), and we stop reading every line of code and rely more on tests (debugging).

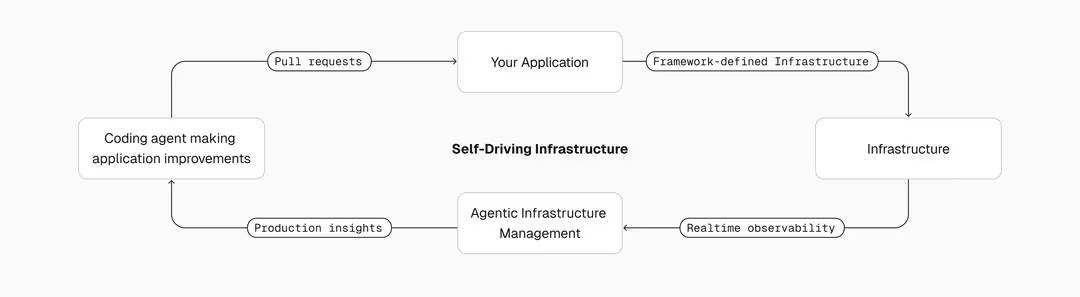

The production-to-code feedback loop, Vercel


Downward propagation and latent capabilities

Downward propagation is all about “what new things are you able to do beyond the original scope”. I am also unable to exhaust the useful possibilities here, so I’ll name a few.

For product building, since code has become cheap, you can prototype something ambitious to see whether the idea even works before investing in understanding every detail. Planning gets replaced by exploring: you generate something that works, then react to it. In other words, you've unlocked the capability to "explore multiple ideas simultaneously." And of course, designers have now unlocked the capability to "realize their designs in code."

For software engineering, you could always have read an unfamiliar codebase in depth when you first encountered it, and you could always have thought in terms of whole-system behavior rather than individual functions, but doing so wasn't very useful: understanding the full picture took a lot of time, and you could rarely act on system-level thinking within a reasonable timeframe. Now you can comprehend the architecture quickly and implement system-level changes fast. In other words, you've unlocked the capabilities to "comprehend code at scale" and "make system-level changes quickly."

You also unlock the capability to "write code in any language," or technology-agnostic building. Learning a new language or framework used to be expensive, but that cost matters much less when AI can build in it for you.

You can now own "disposable software." Previously, you'd never write a custom tool for a one-off task, because the cost-benefit didn't justify it. Now you can generate a purpose-built script, use it once, and throw it away. This unlocks the capability to treat software as ephemeral and situational rather than as a durable asset that must justify its maintenance cost. One-time data migrations, bespoke analysis tools, and single-use automation become routine.
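For a sense of scale, a disposable tool is often no bigger than this: a hypothetical one-off migration that rewrites a date column from `MM/DD/YYYY` to ISO 8601 (`YYYY-MM-DD`) in a small CSV export. It uses only Python's standard library, and the sample data is invented; once the migration has run, the script can be thrown away.

```python
import csv
import io
from datetime import datetime

# Invented sample export; in practice this would be read from a file.
raw = """name,signup_date
Ada,03/14/2024
Linus,11/02/2023
"""


def migrate(text: str) -> str:
    """Rewrite signup_date from MM/DD/YYYY to YYYY-MM-DD and return the new CSV."""
    reader = csv.DictReader(io.StringIO(text))
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=reader.fieldnames, lineterminator="\n")
    writer.writeheader()
    for row in reader:
        parsed = datetime.strptime(row["signup_date"], "%m/%d/%Y")
        row["signup_date"] = parsed.date().isoformat()
        writer.writerow(row)
    return out.getvalue()


print(migrate(raw), end="")
```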

You unlocked the capability to “launch cyber attacks” on your own infrastructure and so much more.

Thinking in another way

With these new capabilities, we start to think about software differently. And we can see this happening at the industry level in how the language, artifacts and methodologies are evolving.

Notice how people who use AI and agents heavily have started to talk differently. They've stopped talking about "writing a function" and instead talk about "prompting agents." They think less about "how to build" and more about "what to build and why." They doubt themselves less, asking "Can I do X?" less often and "How do I do X?" more.

"Prompting" was the word in 2023 and 2024, when we "wrote detailed instructions for AI to follow." In 2025, the craft evolved into "context engineering," "steering," and "harnessing," and artifacts such as "tools," "skills," and the Model Context Protocol (MCP) were invented along the way. We coined the term "vibe coding" for AI-assisted coding in 2025; then, as the models' capabilities matured, we moved on to the more serious "agentic engineering" in early 2026. "Orchestration" and "closing the loop" have recently come into the spotlight, referring respectively to the art of managing multiple agents effectively and to letting agents verify their results by themselves.

The nature of intellectual work here is shifting: we are starting to think more like managers than like individual builders.

Old and new methodologies are being applied to agentic engineering, such as spec-driven development, test-driven development, and design-driven development.

Per Engelbart's theory, all of this requires new training. Experienced engineers need to learn how to trust the model and how to orchestrate agents effectively. AI-native engineers have to learn system design early so they can make leveraged decisions, along with the core concepts and best practices of each software domain.

On a more general level, the way we think about "solving problems" is also changing. Faced with any inconvenience, people will begin to think, "It would be nice to have software that solves this," instead of solving it manually. It is for exactly this reason that I believe demand for software is going to grow to unprecedented levels.

Note that we have only discussed the impact of a single capability: "writing and comprehending code." AI offers far more than that; for example, it also has the capability to "write and comprehend sensible arguments."

H-ALAM/T system

Right now, the best way to boost human intelligence is to make AI smarter so it can help us. While we can think of AI purely as an artifact, we can also view it as an alien species with similar yet distinct cognitive capabilities and constraints, ones that can be improved on a relatively short timescale. I think it would be useful to treat AI as a separate dimension and to study the H-ALAM/T system as a unit of intellectual activity. An H-ALAM/T system is a trained group of humans together with their agents, language, artifacts, and methodology.

We already have early prototypes of such systems. In fact, many of the terms we used in the previous section are the language the industry uses to think about and improve LLM systems. We have external memory systems (artifacts) that let AI persist and update memory outside its limited context window, much as we need notebooks to extend our own memory. We have tools (artifacts) that AI can use to take actions and gather data. We have skills (methodologies) that AI can follow to solve specific, known problems. So in practice we already have H-ALAM/T systems, and they have recently begun to show signs of improving themselves.
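The external-memory idea can be sketched in a few lines: a bounded "context window" that forgets its oldest entries, plus a notebook that persists facts outside it and can be searched later. The Python below is a deliberately simplified, hypothetical model (the window is measured in messages rather than tokens, and lookup is a plain substring match instead of real retrieval); it is not how any particular agent framework implements memory.

```python
from collections import deque


class Agent:
    def __init__(self, window: int = 3):
        # The context window: once full, the oldest message silently falls out.
        self.context: deque[str] = deque(maxlen=window)
        # External memory: persists outside the window, like a notebook.
        self.notebook: dict[str, str] = {}

    def observe(self, message: str) -> None:
        self.context.append(message)

    def remember(self, key: str, fact: str) -> None:
        """Write a fact to external memory, or update it if the key exists."""
        self.notebook[key] = fact

    def recall(self, query: str) -> list[str]:
        """Search the context first, then fall back to the notebook's keys."""
        hits = [m for m in self.context if query in m]
        hits += [fact for key, fact in self.notebook.items() if query in key]
        return hits


agent = Agent(window=3)
agent.observe("user prefers TypeScript")
agent.remember("language preference", "user prefers TypeScript")
for i in range(3):
    agent.observe(f"unrelated message {i}")

print(list(agent.context))         # the preference has scrolled out of context
print(agent.recall("preference"))  # but the notebook still has it
```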

A more mature example I found in the software industry is DeepWiki MCP, which offers a "mental map of the codebase for agents," so agents armed with it can navigate a codebase without spending much compute on understanding it. It's an interface between agents and code that effectively makes agents smarter by letting them answer the same questions faster, with fewer tokens. If you want to read more about this, Andrej Karpathy has shared his observations on it.

The corollary is that if you build AI products today, your product had better let AI solve problems it couldn't solve without it. In other words, your product should amplify AI's intelligence to solve human problems. I currently believe this comes down to building the optimal interface among agent operators, the problem, and human operators.

Closing

Every innovation in tools has consequences of several orders. The first-order consequence is optimization: you do the same things faster. The second-order consequence is that your process hierarchy restructures: because a low-level capability has improved, the way you do higher-level tasks changes entirely, with old steps disappearing and new ones emerging. The third-order consequence is that latent capabilities get unlocked: you find yourself able to do things that were technically possible before but never practical, and even things that were entirely impossible. The fourth-order consequence is that your thinking changes: after living with these new capabilities, you start asking different questions, using different language, and approaching problems from a fundamentally different angle.

Engelbart's framework gives us the vocabulary to trace how a single capability innovation propagates through all four orders. This article applies that framework to modern tools like Heptabase and Notion, but the most exciting case is AI. No tool in history has offered this breadth of new capabilities while changing this fast, which means the second, third, and fourth-order consequences are still unfolding in real time, and we're only beginning to see what gets unlocked.

What a time to be alive!

